Kubernetes 1.19.0 installation steps

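Prerequisites:

- Every node runs CentOS 7.6, 7.7, or 7.8

- Every node has at least 2 CPU cores and at least 4 GB of memory

- No node uses localhost as its hostname, and no hostname contains underscores, dots, or uppercase letters

- Every node has a fixed internal IP address

- Every node has only one network interface; if you need more for a special purpose, add them after the K8S installation is complete

- The IP addresses used by the kubelet on all nodes can reach each other directly (without NAT mapping), with no firewall or security group isolating them

- No node runs containers directly with docker run or docker-compose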
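The hostname, CPU, and memory requirements above can be checked with a short script before installing. This is only a sketch: the function names are illustrative and not part of the install package, and the memory threshold is set to 3.5 GiB on the assumption that a machine sold as "4G" reports slightly less than 4 GiB in /proc/meminfo.

```shell
# Hypothetical helper functions for checking the prerequisites on one node.

# Succeeds if the hostname is not "localhost" and contains only lowercase
# letters, digits, and hyphens (so no underscore, dot, or uppercase letter).
valid_hostname() {
  [ "$1" != "localhost" ] && printf '%s' "$1" | LC_ALL=C grep -Eq '^[a-z0-9-]+$'
}

# Succeeds if the machine has at least 2 CPU cores.
enough_cpu() {
  [ "$(nproc)" -ge 2 ]
}

# Succeeds if MemTotal is at least 3.5 GiB (/proc/meminfo reports kB).
enough_memory() {
  mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  [ "$mem_kb" -ge 3670016 ]
}
```

On a node, the three checks could be combined as `valid_hostname "$(hostname)" && enough_cpu && enough_memory || echo "prerequisites not met"`.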

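# Preparation:

- Set the hostnames

- Synchronize time across the servers

- Upload kube1.19.0.tar.gz

- Install the master and worker nodes

- Join the worker nodes to the master

- Verify

- Set up k8s command auto-completion

- Install the Kuboard k8s dashboard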

# 1. Set the hostname on each node

Master node:

hostnamectl set-hostname master

echo "127.0.0.1 $(hostname)" >> /etc/hosts

Worker node 1:

hostnamectl set-hostname node1

echo "127.0.0.1 $(hostname)" >> /etc/hosts

Worker node 2:

hostnamectl set-hostname node2

echo "127.0.0.1 $(hostname)" >> /etc/hosts

After setting the hostname, disconnect the Xshell session and reconnect.

Disconnect: Alt+C

Reconnect: Ctrl+Shift+R
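The echo commands in this step append to /etc/hosts every time they run, so repeating the step leaves duplicate entries. A minimal sketch of an idempotent variant follows. add_hosts_entry is a hypothetical helper, and it takes the hosts file path as an optional second argument so it can be tried on a copy first.

```shell
# Append "127.0.0.1 <name>" to a hosts file only if that exact line is missing.
# add_hosts_entry is an illustrative name, not part of the install package.
add_hosts_entry() {
  name="$1"
  file="${2:-/etc/hosts}"
  # -x matches whole lines only, -F treats the pattern as a fixed string
  grep -qxF "127.0.0.1 $name" "$file" 2>/dev/null || echo "127.0.0.1 $name" >> "$file"
}
```

After hostnamectl has set the name, `add_hosts_entry "$(hostname)"` would take the place of the echo line.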

# 2. Synchronize time on each node

Run date on each server to check whether the clocks differ

yum install ntp -y

ntpdate cn.pool.ntp.org
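Comparing date output by eye gets tedious with several servers. The sketch below compares two epoch timestamps (as printed by `date +%s`) and succeeds only if they are within a tolerance. clock_skew_ok and the 2-second default are assumptions made for illustration.

```shell
# Succeeds when two epoch timestamps differ by at most max_skew seconds.
# clock_skew_ok is a hypothetical helper; the default tolerance is an assumption.
clock_skew_ok() {
  a="$1"; b="$2"; max_skew="${3:-2}"
  diff=$((a - b))
  # Take the absolute value of the difference
  if [ "$diff" -lt 0 ]; then diff=$((-diff)); fi
  [ "$diff" -le "$max_skew" ]
}
```

Assuming SSH access from the master to node1, one possible use is `clock_skew_ok "$(date +%s)" "$(ssh node1 date +%s)" || echo "clocks differ"`.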

# 3. Upload the tar installation package

Installation package link (extraction code: 1314)

[root@node2 home]# ll

-rw-r--r-- 1 root root 621410419 10月 9 17:07 kube1.19.0.tar.gz

[root@node2 home]# pwd

/home

[root@node2 home]#
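The listing above shows the archive at 621410419 bytes, so a truncated upload can be caught by comparing sizes. This is a rough sketch with a hypothetical helper name. A size match is only a weak check and does not replace a checksum, which the source does not provide.

```shell
# Succeeds if the file exists and its size in bytes equals the expected value.
# has_expected_size is an illustrative helper name.
has_expected_size() {
  file="$1"; expected="$2"
  [ -f "$file" ] && [ "$(wc -c < "$file" | tr -d ' ')" = "$expected" ]
}
```

For the file shown in the listing this would be `has_expected_size /home/kube1.19.0.tar.gz 621410419`.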

# 4. Extract the package and install the master and worker nodes

tar -zxvf kube1.19.0.tar.gz

On the master: cd shell && sh init.sh && sh master.sh

On each worker node: cd shell && sh init.sh

# 5. Join the worker nodes to the master

Generate a token on the master:

[root@master shell]# kubeadm token create --print-join-command

W1009 17:15:31.782757 37720 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

kubeadm join 192.168.0.90:6443 --token ul68zs.dkkvpwfex9rpzo0d --discovery-token-ca-cert-hash sha256:3e3ee481f5603621f216e707321aa26a68834939e440be91322c62eb8540ffce

Join the cluster from each worker node:

[root@node1 shell]# kubeadm join 192.168.0.90:6443 --token ul68zs.dkkvpwfex9rpzo0d --discovery-token-ca-cert-hash sha256:3e3ee481f5603621f216e707321aa26a68834939e440be91322c62eb8540ffce

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING FileExisting-socat]: socat not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
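When the token step is scripted, the warning line printed alongside the join command gets in the way. A small filter that keeps only the join command might look like this (extract_join_command is a hypothetical name):

```shell
# Read text on stdin and print only the line that starts with "kubeadm join",
# dropping warnings such as the configset.go message.
extract_join_command() {
  grep '^kubeadm join '
}
```

On the master, `kubeadm token create --print-join-command 2>&1 | extract_join_command` would then print just the command to run on each worker node.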

# 6. Verify that the cluster pods are Running and every node is Ready

    [root@master shell]# watch kubectl get pod -n kube-system -o wide
    Every 2.0s: kubectl get pod -n kube-system -o wide    Fri Oct 9 17:45:03 2020

    NAME                                       READY   STATUS    RESTARTS   AGE   IP               NODE     NOMINATED NODE   READINESS GATES
    calico-kube-controllers-5d7686f694-94fcc   1/1     Running   0          48m   100.89.161.131   master   <none>           <none>
    calico-node-42bwj                          1/1     Running   0          48m   192.168.0.90     master   <none>           <none>
    calico-node-k6k6d                          1/1     Running   0          27m   192.168.0.189    node2    <none>           <none>
    calico-node-lgwwj                          1/1     Running   0          29m   192.168.0.68     node1    <none>           <none>
    coredns-f9fd979d6-2ncmm                    1/1     Running   0          48m   100.89.161.130   master   <none>           <none>
    coredns-f9fd979d6-5s4nw                    1/1     Running   0          48m   100.89.161.129   master   <none>           <none>
    etcd-master                                1/1     Running   0          48m   192.168.0.90     master   <none>           <none>
    kube-apiserver-master                      1/1     Running   0          48m   192.168.0.90     master   <none>           <none>
    kube-controller-manager-master             1/1     Running   0          48m   192.168.0.90     master   <none>           <none>
    kube-proxy-5g2ht                           1/1     Running   0          29m   192.168.0.68     node1    <none>           <none>
    kube-proxy-wpf76                           1/1     Running   0          27m   192.168.0.189    node2    <none>           <none>
    kube-proxy-zgcft                           1/1     Running   0          48m   192.168.0.90     master   <none>           <none>
    kube-scheduler-master                      1/1     Running   0          48m   192.168.0.90     master   <none>           <none>

    [root@master shell]# kubectl get nodes
    NAME     STATUS   ROLES    AGE     VERSION
    master   Ready    master   22m     v1.19.0
    node1    Ready    <none>   2m17s   v1.19.0
    node2    Ready    <none>   24s     v1.19.0
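To check node status from a script instead of reading the table, the `kubectl get nodes` output can be filtered. A minimal sketch, with not_ready_nodes as a hypothetical helper name:

```shell
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose STATUS column is not exactly "Ready"; the header row is skipped.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" {print $1}'
}
```

`kubectl get nodes | not_ready_nodes` prints nothing when every node is Ready.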

# 7. Set up k8s command auto-completion (optional)

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

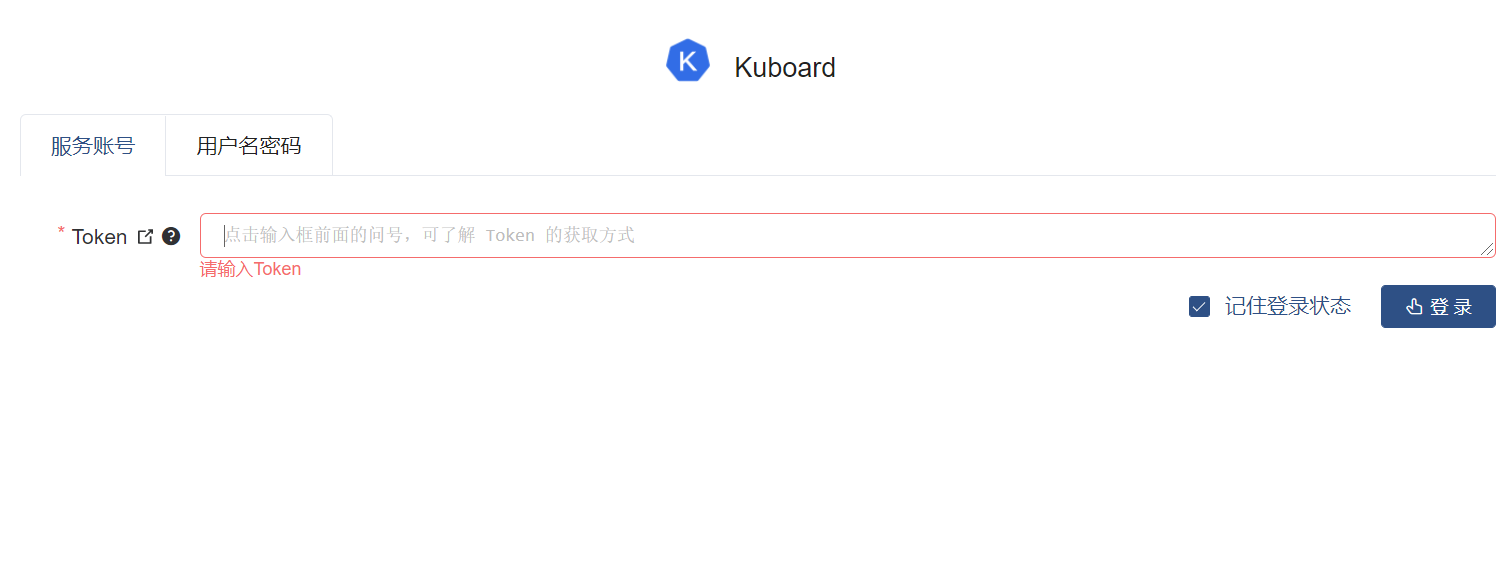

# 8. Install the Kuboard k8s dashboard

Installation package link (extraction code: 1314)

On one node of the Kubernetes cluster, load the prepared image

docker load < kuboard.tar

docker images

Retag the image

docker tag 23b68e80aa82 eipwork/kuboard:latest

docker images
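The image ID in the docker tag command is copied by hand from the docker images listing. If the archive holds an untagged image, docker load prints a line of the form "Loaded image ID: sha256:<hex>", and the ID can be taken from there. The sketch below rests on that assumption, and loaded_image_id is a hypothetical helper name.

```shell
# Read `docker load` output on stdin and print the image ID from a
# "Loaded image ID: sha256:<hex>" line; prints nothing if no such line exists.
loaded_image_id() {
  sed -n 's/^Loaded image ID: sha256://p'
}
```

One possible use is `id=$(docker load < kuboard.tar | loaded_image_id) && docker tag "$id" eipwork/kuboard:latest`.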

Prepare the kuboard-offline.yaml file

In this file, change the node name on line 26 to the node where the kuboard image was loaded in the previous step

vim kuboard-offline.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kuboard
      namespace: kube-system
      annotations:
        k8s.kuboard.cn/displayName: kuboard
        k8s.kuboard.cn/ingress: "true"
        k8s.kuboard.cn/service: NodePort
        k8s.kuboard.cn/workload: kuboard
      labels:
        k8s.kuboard.cn/layer: monitor
        k8s.kuboard.cn/name: kuboard
    spec:
      replicas: 1
      selector:
        matchLabels:
          k8s.kuboard.cn/layer: monitor
          k8s.kuboard.cn/name: kuboard
      template:
        metadata:
          labels:
            k8s.kuboard.cn/layer: monitor
            k8s.kuboard.cn/name: kuboard
        spec:
          nodeName: node2
          containers:
          - name: kuboard
            image: eipwork/kuboard:latest
            imagePullPolicy: IfNotPresent
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kuboard
      namespace: kube-system
    spec:
      type: NodePort
      ports:
      - name: http
        port: 80
        targetPort: 80
        nodePort: 32567
      selector:
        k8s.kuboard.cn/layer: monitor
        k8s.kuboard.cn/name: kuboard
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: kuboard-user
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kuboard-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: kuboard-user
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: kuboard-viewer
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kuboard-viewer
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: view
    subjects:
    - kind: ServiceAccount
      name: kuboard-viewer
      namespace: kube-system
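The nodeName change can also be made without opening an editor. A minimal sketch, assuming the manifest contains a single nodeName key, as this one does. set_node_name is a hypothetical helper that rewrites the value while keeping the indentation.

```shell
# Rewrite the value of the "nodeName:" key in a manifest, keeping indentation.
# set_node_name is an illustrative helper; it writes through a temporary file.
set_node_name() {
  file="$1"; node="$2"
  tmp=$(mktemp) || return 1
  sed "s/^\([[:space:]]*nodeName:\).*/\1 $node/" "$file" > "$tmp" && cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return $status
}
```

For example, `set_node_name kuboard-offline.yaml node1` would pin the Deployment to node1, which only makes sense if the kuboard image was loaded on that node.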

Start the dashboard

[root@master home]# kubectl apply -f kuboard-offline.yaml

deployment.apps/kuboard created

service/kuboard created

serviceaccount/kuboard-user created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-user created

serviceaccount/kuboard-viewer created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer created

Check whether the pod is ready

kubectl get pods -l k8s.kuboard.cn/name=kuboard -n kube-system

Get the administrator token

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}')

In a browser, open <IP of any node>:32567

Enter the token obtained in the previous step
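The describe command prints the token together with other fields such as ca.crt and namespace. To copy only the token value, its output can be piped through a small filter. This is a sketch, and secret_token is a hypothetical helper name.

```shell
# Read `kubectl describe secret` output on stdin and print only the token value.
secret_token() {
  awk '$1 == "token:" {print $2}'
}
```

Appending `| secret_token` to the describe command from this step prints just the string to paste into the login page.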
